Note: The full source-code for this project is available here [source].

This is a follow-up post to Homebrew Voxel Engine, in which I briefly describe my implementation of an OpenGL based voxel engine as a new environment for my task-bots.

The task bots use a set of primitive tasks and an intelligent task queue system to (hopefully) create complex behavior. With this general behavior model, it becomes easy to define new behaviors sets and create a diverse group of agents to simulate an ecosystem. Think of it as a modular NPC ‘AI’ system.

This is the first major update post on the task system since I presented the original concept, but I won’t be covering the basic structure in this post, simply the additions and improvements.

One running theme for this project is: EVERYTHING IS A TASK (as far as I can make it a task). And I like it that way. Everything in the simulation is therefore by default agent-driven.

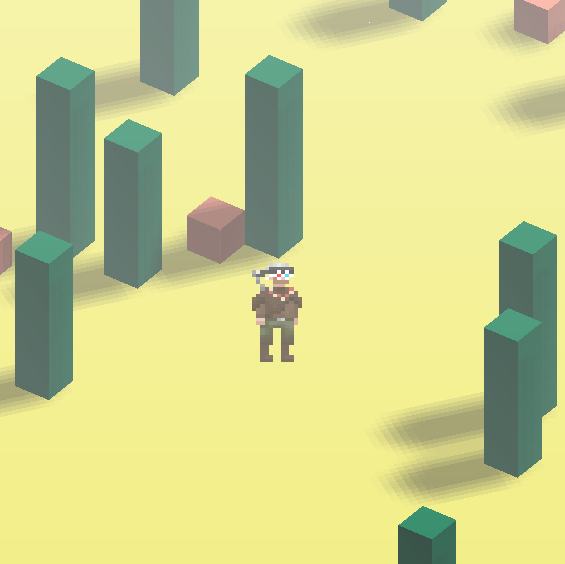

Switching to 3D, I decided to theme the simulation again (like the insect theme in 2D), and I settled for “westward expansion”, hence the new code-name for this project “Territory”. I hope you enjoy! Be prepared for lots of nice videos below of the new bots in action.

Bots 2.0

The bots have experienced a number of significant improvements to allow for more interesting behavior, more control and more robustness.

The rough list of improvements is as follows:

- 3D path finding

- Improved memory data structure

- Tasks have animations and emit sounds

- Decision making and interrupts

- Simulation control panel

- Point-and-click task assignments

- Resource hierarchy and inventory system

- New task primitives!

In the following blog post, I want to go into more depth about why / how these improvements were implemented and what they mean for the bots. I will be following along the with code samples, that you can look into more detail yourself on my GitHub.

General Improvements

One of the first things I had to do was visualize the bots. To do this, I commissioned some sprite art from my brother, who did an excellent job creating a trapper model!

(Approximate) Path-finding in 3D

The next thing I worked on once I got them to render at their position was to re-implement the walk task, so they could stroll around and I could see them in action.

This meant adapting path-finding to a 3D environment. Luckily, this wasn’t to complicated, and I just had to redefine what the search neighborhood was, as I was already using A* by Justin-Heyes Jones. This is lightning fast, even for a hundred bots doing concurrent path-finding!

Bots are given a “range” vector which determines their symmetric “reach” in all three spatial dimensions. This is useful for tasks, such as breaking and placing blocks, as well as object interactions.

The path-finder was then modified to allow “approximate” path-finding, meaning that finding a location within reach of the target also returns success. If the search terminates without a result, there is no accessible position in range.

This is useful because of the way tasks are parameterized.

Example: A bot should destroy a block at a specific position. To do that, the bot needs to move within range of the target.

I could write an algorithm that finds an adjacent free-space (by comparing the neighborhood) and tell the bot to go there first. This means doing extra calculations though, without the guarantee that the space is even accessible.

The simpler approach is to specify the block position itself as the target position for both the walk and the destroy task (as it will be valid for both), and let the approximate path finding try to find a position in range.

Improved Memory Structure

Bots would store a set of information in their memory in the form of an object containing “tags”. The contents of the tags in a memory are thereby considered “correlated”.

This can be pictured as a state vector which can be locally determined for a bot, containing tags for position, block types, bot IDs, path-finding accessibility, times, etc.

I noticed many similarities between the arguments that are passed to every task and what bots store in their memory, so I created a “State” class to act as the state vector. This is now what is stored in their memory and passed to tasks.

This single data structure represents the form of correlated information in the bot’s mind, and what they use to inform their actions. Bots thus implicitly store task parameters in their memory, and can use their memory more directly when applying it to tasks.

Example: When searching for resources, bots execute a look task to store spatial information within view-distance in their memory. They can then query their memories for matches to the resource they are searching for, and plug the memory (almost) directly into the walk task as the parameter!

Task Animations and Audio

One of the nice additions through the new engine is the possibility to animate the bots.

Since their sprites are visualized through textured quads, I created an animation sprite-sheet for them, indexed by the current animation frame and an animation ID.

A task can specify an associated animation, and a number of frames which should be executed. Animations can also be given a translation matrix which is applied to the sprites quad every animation frame, which leads to smoother animations between grid points.

The doAnimationFrame() method cycles iterates the frame index cyclically, and when the number of frames is equal to the number of specified frames, it returns true.

bool Task::perform(World &world, Population &population, Audio &audio){

//Change the Name and Execute the Task

population.bots[botID].task = name;

if(initFlag){

//Set the animation and play the sound associated with task

if(sound != SOUND_NONE) audio.unprocessed.push_back(sound);

population.bots[botID].sprite.setAnimation(animation, translate);

}

if(population.bots[botID].sprite.doAnimationFrame()){

if((*this.*handle)(world, population, audio, args)){

return true;

}

}

//Task was executed but needs to be repeated, set initFlag

initFlag = false;

return false;

}Additionally, a task can specify a sound-bite that should be played the first time that the task is executed.

The sound bite’s enumerator is added to an unprocessed stack that is kept track of by an audio class. Every tick the audio class then checks for any new sound requests, and plays them accordingly from the main function.

Overall this has the nice effect that tasks can now “take time” through animations, by only physically realizing the effect of the task once the animation is complete.

Other Improvements

Due to the change to a large 3D world, I now only load a subset of the world data into virtual memory at any moment.

Bots that are not within render distance of the camera do not execute tasks because the spatial information they need is simply not loaded into memory.

This is an issue I want to tackle in the future, but this will require significant thought.

Bots have also been given a flag that specifies whether they are “dead” or not, which also stops their task execution and rendering. This is currently implemented mostly to debug, in case a bot is spazzing out and is preventing the others from being productive.

Task System Improvements

Now for the interesting part!

Queue Handling

I alraeady had a standard loop structure for handling the sub-queue of a task queue. I decided to wrap all that functionality into a function called handleQueue().

//Handle the Queue

bool Task::handleQueue(World &world, Population &population, Audio &audio){

//If the Queue isn't empty, handle top task

if(!queue.empty()){

//Get the Top Task

Task newtask = queue.back();

queue.pop_back();

//Perform the Task and Put it back on when successful

if(!newtask.perform(world, population, audio)){

//We need to repeat the animation

queue.push_back(newtask);

}

//Pass the arguments

if(!queue.empty() && queue.back().pass){

queue.back().args = newtask.args;

}

//Task was performed successfully, we leave it off.

return false;

}

//Queue is empty, thereby we have been completed.

initFlag = true;

return true;

}This also handles initial flag setting and argument passing.

I realized that it would be useful if tasks can use the state argument of the previous task (i.e. its output) as their input. To facilitate this, I defined a “pass” Boolean, which says whether a task in the sub-queue attempts to take the state of the previous task at the end of its execution as its input.

This is now the most general form of how sub-queues are executed. Definition of new super-tasks which contain sub-queues is now very simple:

//Example - How to Construct a High-Level Task

bool Task::example(World &world, Population &population, Audio &audio, State &_args){

//Check

if(initFlag){

Task null("Example", botID, &Task::null); //Construct the Task "null"

null.animation = 0; //Set the Task's Metadata

null.sound = SOUND_NONE;

//...

Task null2("Other Example", botID, &Task::null);

null2.animation = 1;

null2.sound = SOUND_NONE;

null2.pass = true;

//...

//Add more tasks...

//Add to queue in reverse order...

queue.push_back(null2);

queue.push_back(null);

}

//Handle the Queue appropriately.

return handleQueue(world, population, audio);

}Decision Making

Bots should be able to autonomously decide their actions. I came up with what I think is a pretty good concept for constructing a list of tasks from generally stated goals (e.g. “don’t be dead, don’t be hungry”), but the system has not been implemented yet and I am still working on a prototype.

When bots run out of tasks to perform, they newly default to a “decide” task that then allocates new tasks for them.

The concept for the decision making was to give the bots an extra set of memory called the “subconscious”. Here, before and after they execute every task they generate a local State object and store it, so that they are capable of associating a task with a cause and effect relationship.

The decision making process would then consist of:

- Take a general State object (or multiple) as the generic goal (with an arbitrary degree of specificity).

- Use subconscious memory to construct potential lists of tasks that will transform the current state to a goal state.

- Use the bots preferences to evaluate some cost function over the set of tasks to decide the most feasible pathway to the goal.

Bots can have multiple generally stated goals, and can generate a preferred task pathway for each one, and then additionally evaluate what they want to do, based on an urgency score or similar prioritization.

Example: If they have work to do, but are very tired or very thirsty, they might decide that those things take priority.

An interesting side effect of this system is that bots would only construct certain pathways if they “know” how to do it. This naturally leads to ideas such as “learning” how to do things, e.g. from other bots sharing their experiences of cause and effect.

Originally, I thought I could state this as an optimization problem in some vector space, using the elements of the subconscious as base vectors for traversing the space.

The non-metric form of the state vector makes this very difficult to formulate, and I have been able to implement the necessary code framework around this, but the actual generation of a task list from the beginning and end state has eluded me.

Example: Have wood in inventory. This could be done either by chopping wood, buying wood, stealing wood, or any other set of actions that will ultimately lead to the goal state.

One reason why this is difficult is that a task might require a specific item to execute (chopping wood requires an axe), locking the starting position of the base vector in this space to a specific subspace of the vector space. I find it very difficult to wrap my head around this, and how one might optimize under these strange, non-linear constraints.

Currently, the decide task does nothing, but changing the content allows you to dictate their “default behavior”.

bool Task::decide(World &world, Population &population, Audio &audio, State &_args){

if(initFlag){

//Listen!

Task listen("Listen to surroundings.", botID, &Task::listen);

listen.perform(world, population, audio);

initFlag = false;

}

//Check if we have mandates to go

Task *masterTask = new Task("Default Task", botID, &Task::Dummy);

population.bots[botID].current = masterTask;

return false;

}This definitely needs more focus in the future.

Task Interrupts

Bots need to be able to not only act according to their internal state, but react to impulses. To implement this, bots are given an “interrupt” flag, which can be set by calling a tryInterrupt() function. This makes the bot stop what they are doing and execute a decide task at the next tick.

//Per Tick Executor Task

void Bot::executeTask(World &world, Population &population, Audio &audio){

//Check for interrupt!

if(interrupt){

//Reevaluate Mandates, so we can respond

Task *decide = new Task("Handle Interrupt", ID, &Task::decide);

current = decide;

interrupt = false;

}

else{

//Execute Current Task, upon success decide again

if((current->perform)(world, population, audio)){

Task *decide = new Task("Decide on Action", ID, &Task::decide);

current = decide;

}

}

}This is useful for a variety of things, including responding to changes in their environment (a block just fell on their head) or communication between bots.

Bots are additionally given a short-term memory which can be pictured as “sensory information”. This is “public” memory and can be written to from any source in proximity, but is small and easily overwritten. Setting the interrupt flag will trigger a “listen” task that writes the sensory short-term memory to long-term memory.

This way, bots will only ‘hear’ things if they are listening, and will ignore most impulses from the outside unless their attention is called to it.

The tryInterrupt() function currently always succeeds, but could easily be expanded in the future to take into account things like distance from a noise, whether a bot is deaf, whether they are focused / hard to distract, etc.

Additionally, the act of interrupting another bot was of course wrapped into a task.

bool Task::interrupt(World &world, Population &population, Audio &audio, State &_args){

//Attempt to set an interrupt on a different bot (persistently)

if(population.bots[botID].tryInterrupt(_args)){ //!!!!Note this uses botID

return true;

}

return false;

}Communication Pipeline

The main application of the interrupt flag and sensory information is to allow bots to communicate information with each other. A number of communication tasks are easily defined that take advantage of the system:

- Tell – Write a memory to another bots short-term memory and interrupt them.

- Respond – Execute a memory query using the argument and execute a tell task.

- Request – Write a memory to a bot with a request tag, meaning they should execute the specified task with the arguments.

- Ask – Request a respond task from a bot with a memory query.

- Converse – Iteratively ask and respond between two bots, with some “boredom” break condition between the two bots.

bool Task::tell(World &world, Population &population, Audio &audio, State &_args){

if(initFlag){

//Write to the bots shortterm memory

population.bots[_args.target].addSound(_args);

//Do an interrupt (persistently)

Task interrupt("Interrupt Bot", _args.target, &Task::interrupt);

interrupt.args = _args;

queue.push_back(interrupt);

}

return handleQueue(world, population, audio);

}Currently, only the tell task has been implemented, but it works fine and bots have successfully written their memory to another bot’s memory.

The exact form of the request task in context of the decision making system is still being considered currently. In general the decision making and communication system will be quite tightly coupled, and will be the focus of later improvements, once the world has sufficient content to interact with.

Simulation Control and Feedback

The addition of DearImgui in the new engine allows for very nice debugging tools that were previously not possible.

An interface has been constructed for displaying the contents of a bots memory, their current task queue and their inventory, as well as simple constructor GUIs for changing these.

The interface gives important debugging information, such as their position and internal state this way.

For instance, as the bot moves around and looks, I can take a look at the internal memory state to find out if everything is working the way I want it to.

The ability to construct tasks for a bot live in-simulation is on the most significant improvements for this update. Using the “Add Task” GUI, their behavior can be directly manipulated and tested.

Together with the picker, which selects a position in world-space and passes it to the task constructor, this is even easier and allows for point-and-click task construction.

This really drives the simulation interactivity. Perhaps using hotkeys in the future assigning tasks could be even more user friendly, but I need to consider this.

Finally, the interface allows for interruption and killing of bots (simply by setting the flags).

Resource Hierarchy

I decided that bots needed something interesting to interact with in their world, so I decided to start designing the concept of a resource hierarchy. Using a number of item and inventory management tasks, they are able to harvest, convert and use resources in game.

I constructed an Item object which maintains a number of tags (via enumerators) that will be useful for their behavior in the future, when I write procedural items.

//...

typedef std::vector<Item> Inventory;

class Item{

public:

//Item Information

std::string name;

ItemType _type;

int variant;

int quantity;

glm::vec3 pos;

//For Rendering

Sprite sprite;

void setupSprite();

//Generate Item from Block

bool fromTable(BlockType _block);

//...The state objects that are passed as arguments to tasks and stored in bot memory now also contain an inventory to items, so bots can associate items with other information and use items to parameterize tasks.

The tasks relating to the resource hierarchy then mostly have to do with the manipulation of items and editing blocks in the world data.

//Item Management Tasks

bool seek(World &world, Population &population, Audio &audio, State &_args);

bool destroy(World &world, Population &population, Audio &audio, State &_args);

bool place(World &world, Population &population, Audio &audio, State &_args);

bool build(World &world, Population &population, Audio &audio, State &_args);

bool take(World &world, Population &population, Audio &audio, State &_args);

bool convert(World &world, Population &population, Audio &audio, State &_args);All of these with the exception of convert are implemented.

These tasks are simple on the surface, but require lots of error handling because they deal directly with the world memory.

The destroy task for instance ensures that all required items are present in the bot’s inventory, we aren’t destroying air, we are within range, etc.

Block destruction times are again given by the associated animation and setting the number of frames.

For instance, for chopping wood, the axe-chop animation only has 4 frames in theory, but I can set the frame number to 24 so the animation must be repeated 6 times before the block breaks (see video below).

The take task will pickup items at a location if within range. If items are passed to the task by argument, then bots will only attempt to pickup matching items.

The seek task will recursively query the memory for matches to the argument, and will have the bot walk to those locations until they find what they are looking for. If no memories are found, they do a random walk and look.

By applying seek, destroy and take together, bots can autonomously harvest resources. I built a basic scheme in the dummy task as an example, using argument passing.

//Special Functions

bool Task::Dummy(World &world, Population &population, Audio &audio, State &_args){

if(initFlag){

Task seek("Seek Clay", botID, &Task::seek);

seek.args.block = BLOCK_CLAY;

seek.args.range = population.bots[botID].range;

//Use the outputs from the previous task for these tasks.

Task destroy("Collect Clay", botID, &Task::destroy);

destroy.sound = SOUND_HIT;

destroy.pass = true;

destroy.animation = 3; //Set the Task's Animation

Task take("Take Clay", botID, &Task::take);

take.pass = true;

//Add them to the queue

queue.push_back(take);

queue.push_back(destroy);

queue.push_back(seek);

}

return handleQueue(world, population, audio);

}Argument passing is useful here because the seek task itself has a sub-queue (looking, walking, etc.), and when it finds an item, it will have the position where it found it (and then walked to) in its argument before returning true and terminating. This way, when the seek task is successful, the destroy and take class can blindly adopt whatever the seek argument is and use it as input.

This is what it looks when a bot runs around does this:

The edge case handling on these tasks is not quite perfect yet, and I’m ironing out the kinks.

For instance, if bots have large view-distances, they occasionally see unreachable targets and try to access them but fail. The entire task-queue repeats, but when they look again, the large view distance means many additions to the memory, and their vision isn’t filtered first or their memory queue is too small to hold everything, they will forget that the position they just saw was unreachable, making them get stuck.

This is fixed now (I think) by raising the size of their memory and sorting the choice of block which to go towards in memory by proximity to the bot.

“Place” is a more simple task and currently simply overwrites whatever block is present with the block specified in the argument. Also, placement is currently not associated with a resource cost from the bot’s inventory. This will be adapted in the future, and is currently done this way due to strange memory bugs.

One of the more fun things I can do by combining blueprints and the place task is to let bots autonomously construct buildings. A basic build task looks like this:

bool Task::build(World &world, Population &population, Audio &audio, State &_args){

//Multiple Placement Tasks after designing an appropriate blueprint!

if(initFlag){

//Define some blueprint...

Blueprint _house;

_house.hut(); //Choose which guy to build

_house = _house.translate(_args.pos + glm::vec3(0, 1, 0)); //Translate into worldspace and sort

std::sort(_house.editBuffer.begin(), _house.editBuffer.end(),

[](const bufferObject& a, const bufferObject& b) {

if(a.pos.y < b.pos.y) return true;

if(a.pos.y > b.pos.y) return false;

//Rest doesn't matter.

return false;

});

//Building Tasks

Task walk("Walk to construction site.", botID, &Task::walk);

Task destroy("Destroy occupying block.", botID, &Task::destroy);

Task place("Construct Building.", botID, &Task::place);

//Generate a series of walking and building tasks.

while(!_house.editBuffer.empty()){

//Walk to the position...

walk.args.pos = _house.editBuffer.back().pos;

walk.args.range = population.bots[botID].range;

destroy.args.pos = _house.editBuffer.back().pos;

place.args.pos = _house.editBuffer.back().pos;

place.args.block = _house.editBuffer.back().type;

BlockType _cur = world.getBlock(_house.editBuffer.back().pos);

//The required block is already present

if(_house.editBuffer.back().type == _cur){

_house.editBuffer.pop_back();

continue;

}

//Check if a block needs to be destroyed...

if(_house.editBuffer.back().type == BLOCK_AIR){

queue.push_back(destroy);

queue.push_back(walk);

_house.editBuffer.pop_back();

continue;

}

//Otherwise, we need to simply straight up replace the block.

queue.push_back(place);

queue.push_back(walk);

_house.editBuffer.pop_back();

}

}

//Handle the constructed taskQueue

return handleQueue(world, population, audio);

}

Where I generate a blueprint, sort the editBuffer from bottom to top, and just let the bot build it, while having him check if he needs to remove blocks that are in the way.

… and that gives this awesome result:

The reason the place task overwrites simply is because destroying a block first and placing a new block without evaluating the blueprint in-between (i.e. editing the world’s change blueprint) leads to two blocks at the same position in the world’s editBuffer and thereby buggy behavior which gives a memory leak and a sharp performance dip as soon as that happens.

A possible solution is to check if the position in the editBuffer exists before adding it, but that would mean iterating over the whole thing before making a single addition, so I am not sure how I want to approach that just yet. Since I already do sorting routines frequently, maybe I can find a sort that removes duplicates under some equality condition.

With a method to procedurally generate the blueprints, and then generate a material cost by iterating over the contents of the editBuffer, bots could design their own buildings and harvest the resources to construct them!

Next Steps and Final Words

The past few months have definitely had their ups and downs – often I found myself hitting a wall with this project, having many ideas but struggling to find a place to begin.

The sporadic elements that I have added to the simulation so far will probably be expanded by more sporadic elements, but overall I am trying to keep everything “systematized” as much as possible.

Many things are still not implemented, but proper systems design takes quite a long time. Many systems also simply rely on the existence of other systems to be not only implementable but “interesting”.

Here are some thoughts for interesting additions that I might work on in the near future:

- Finish the Communication Task Primitives

- Add more interesting world generation

- Add vegetation and other fauna with primitive dummy-behavior tasks (needs artwork and porting of the climate system)

- Port my building generator from python to C++ and use it to generate blueprints (edit: done)

- Bot customization (skins, personalities, etc.)

- Bot group structures and corresponding tasks

- Task delegation between bots and general driving forces for behavior

I realize this was an extremely long format blog post, and in the future I hope I can write smaller bits about the progress of various subsystems, instead of rolling out all these significant changes at once.

If you still want a more detailed breakdown of the exact features / implementation (i.e. how you can use a similar system for your game AI) and not just these broad strokes, you should read the git commit log or contact me.

Note: The full source-code for this project is available here [source].

Here’s a video of the follow task, in which a bot just perpetually tries to move within range of another bot.

Also, here is a video of a recursive cactus harvest: